The end goal for many Artificial Intelligence enthusiasts is to create a model that closely mimics the human brain. The effort to narrow the gap between AI and humans is ongoing. So far, AI has done a rather satisfactory job at visual tasks.

Its object detection capabilities have led to AI being used in self-driving cars, where it processes up to 2100 frames per second! But to make AI systems more ‘human-like’, it is necessary to factor in inputs from the other senses we humans possess as well. To be more precise, we’re talking about the ability to feel and smell.

A group of researchers from the University of Toronto has developed a stretchy, transparent skin that advances the field of artificial ionic skin. This new technology, called ‘AISkin’, is capable of recording complex sensations of the kind human skin can feel.

A ‘sensing junction’ is created by bonding together two oppositely charged sheets of a stretchable substance. These substances are known as hydrogels, and the sensing junction responds whenever the skin is subjected to strain, humidity, or changes in temperature. The ‘feel’ is then translated into electrical signals.

The researchers aim to place this artificial, adhesive skin on top of our real skin. They listed many areas where AISkin could play an important role. It could lead to the development of skin-like Fitbits, where the adhesive layer measures various body parameters. It could also help make progress in muscle rehabilitation and sports therapy, with the skin collecting data on how quickly a muscle heals after an athlete’s game.

The scientists are now adding bio-sensing capabilities to the material, which will allow it to measure bio-molecules in bodily fluids. If they’re successful, it could lead to some very interesting applications in medicine, such as smart bandages.

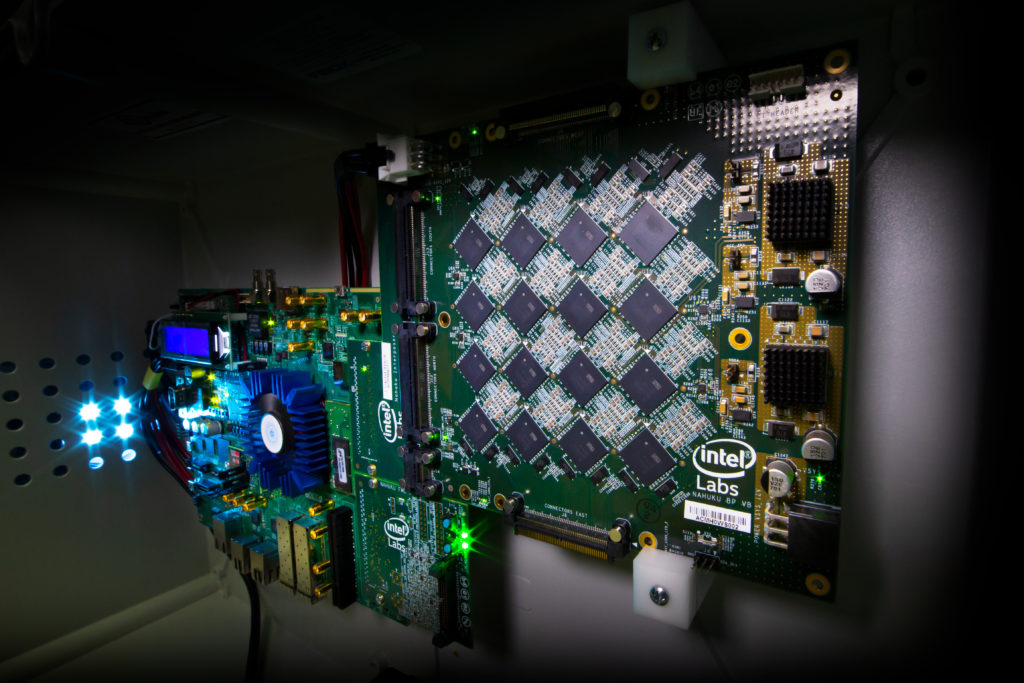

Turning to smell, scientists at Cornell University have created a ‘neuromorphic’ artificial intelligence model which has proven to be excellent at detecting faint smells amidst background noise. The program owes its success to its mutating structure, which resembles the neural circuitry in a mammal’s brain.

The AI ‘smells’ by taking in the electrical voltage outputs of chemical sensors that were placed in a wind tunnel and exposed to gusts of scents such as methane or ammonia. The voltage input sets off a cascade of activity among the model’s neurons, allowing it to recognise a variety of different scents.

The key difference between this algorithm and others is that it can learn new aromas without forgetting old ones. The brain-inspired setup prepares the AI for learning new smells, unlike regular neural networks, which start off with a ‘clean slate’ of neurons. As new neurons are added into the mix, the model can learn novel scents without disrupting the older neurons.

Usually, a regular AI has to be retrained from scratch using samples of both the older and newer scents, but the brain-like structure can become attuned to new scents without disturbing what it has already learned. As a result, the neuromorphic system learns more quickly and is faster at applying older insights to newer cases.
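To make the idea of "learning a new scent without retraining on the old ones" concrete, here is a minimal Python sketch. It is not the Cornell model and it is not neuromorphic: it swaps in a much simpler nearest-prototype memory, where each scent is stored as one reference vector. The class name `PrototypeScentMemory`, the four-sensor readings, and the extra scent `acetone` are all made up for illustration.

```python
import math


class PrototypeScentMemory:
    """Toy one-shot learner: keeps one reference vector per scent.

    A simplified stand-in, NOT the neuromorphic model from the article.
    """

    def __init__(self):
        self.prototypes = {}  # scent name -> unit-length sensor vector

    @staticmethod
    def _normalise(reading):
        # Scale the reading to unit length so that only its shape matters,
        # not how concentrated the sample was.
        norm = math.sqrt(sum(v * v for v in reading))
        if norm == 0:
            raise ValueError("sensor reading must not be all zeros")
        return [v / norm for v in reading]

    def learn(self, name, reading):
        # Learning a scent only adds one entry; existing entries stay untouched,
        # so nothing learned earlier has to be retrained.
        self.prototypes[name] = self._normalise(reading)

    def identify(self, reading):
        if not self.prototypes:
            raise ValueError("no scents have been learned yet")
        query = self._normalise(reading)
        # Pick the stored scent with the highest cosine similarity to the query.
        return max(
            self.prototypes,
            key=lambda name: sum(a * b for a, b in zip(self.prototypes[name], query)),
        )


# Hypothetical sensor voltages, invented for this example.
memory = PrototypeScentMemory()
memory.learn("methane", [0.9, 0.1, 0.2, 0.0])
memory.learn("ammonia", [0.1, 0.8, 0.1, 0.3])

weak_methane = [0.45, 0.05, 0.1, 0.0]  # same pattern at half strength
print(memory.identify(weak_methane))

# Add a third scent later, seen only once; the first two are not revisited.
memory.learn("acetone", [0.0, 0.2, 0.9, 0.1])
print(memory.identify(weak_methane))
```

Both `print` calls report `methane`: adding `acetone` does not change how the earlier scents are recognised. A real system would of course have to cope with noisy, overlapping sensor patterns, which is where the brain-inspired structure described above comes in.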

In a battle of algorithms, the neuromorphic AI was pitted against regular old AI in a smell test. The neuromorphic AI was allowed to smell each scent only once, while the regular models underwent hundreds of training trials. During the test, each AI sniffed samples that contained only 20 to 80 per cent of the overall scent. The neuromorphic model identified the right smell 90 per cent of the time, whereas the regular models achieved an accuracy of a mere 52 per cent.

This leads us to the final quandary. How good can AI get? Can it beat humans at a particular task anytime in the near future? The answers depend heavily on how you approach the question. The AI algorithm itself isn’t going to become as good as the human brain anytime soon, because the neural network architectures we currently have are very rudimentary compared to the complexity of the brain.

But we could always improve the ‘inputs’ to the model to the point where it can perform tasks that humans will never be able to carry out. For example, the human ear can only hear sounds in the frequency range of 20 Hz to 20,000 Hz. Machines have no such limitation, and they can be used to listen to and analyse sounds we cannot normally hear.
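As a small illustration of that range, the Python sketch below labels a frequency as below, inside, or above the 20 Hz to 20,000 Hz band mentioned here. The function name `hearing_band` and the choice to treat both endpoints as audible are assumptions made for this example.

```python
# Human hearing range as quoted in the text, in hertz.
HUMAN_HEARING_HZ = (20.0, 20_000.0)


def hearing_band(frequency_hz):
    """Label a frequency relative to the human hearing range."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    low, high = HUMAN_HEARING_HZ
    if frequency_hz < low:
        return "infrasound"  # too low for the human ear
    if frequency_hz > high:
        return "ultrasound"  # too high for the human ear
    return "audible"  # endpoints are counted as audible (an assumption)


for freq in (5, 440, 40_000):
    print(freq, "Hz:", hearing_band(freq))
```

A machine with a suitable microphone could record and analyse all three of these tones, while a person would only hear the one at 440 Hz.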

Similarly, a large number of animals have better noses than us and can detect smells with far greater sensitivity. Machines could replicate that and learn to distinguish between smells that are present in very low concentrations, leading to their use in multiple fields including air quality monitoring, toxic waste detection and medical diagnosis.

There are many tasks like these where we humans don’t hold up well. Here, brainpower isn’t what we’re lacking; instead, our five senses aren’t sharp enough to provide the brain with the data it requires. This is where computers step in, helping us analyse information we can’t receive ourselves.

All in all, for AI to reach a level comparable to humans, we either need to improve the quality of the ‘inputs’ provided to it or bring its architecture up to the level of the human brain. The latter isn’t happening anytime soon, so it’s up to us to make advances by taking advantage of the former.
