Artificial Intelligence has been the most talked-about topic in the tech world for years. At Google I/O 2018, Google showcased how much progress it has made in this domain and how much closer it is to its goal of incorporating AI into every Google service.

One of the stars of the show was Google Lens, which was unveiled at the same event last year to a lot of buzz and excitement. It was not a perfect product back then and was available only on Google’s Pixel series of smartphones. Since then it has improved a lot, gained many new features, and rolled out to iOS devices too.

This year Google announced three new features for Lens: Smart Text Selection, Style Match, and Real-Time Results. It also announced that Lens will be expanding to a number of other Android devices.

What is Google Lens?

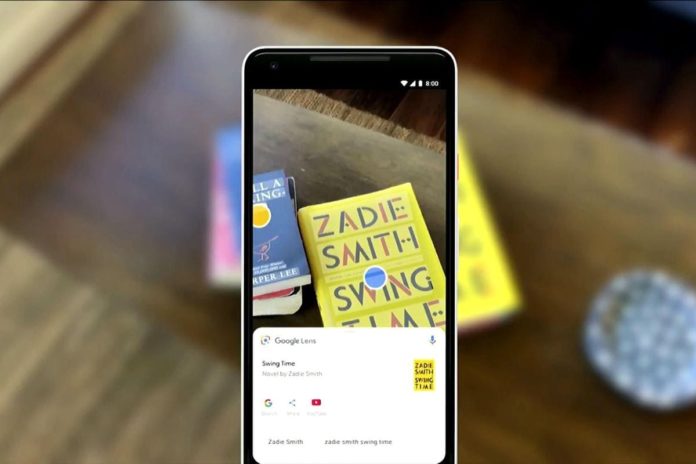

Google Lens is a platform that uses a smartphone’s camera to scan a product, building, image, or animal and tell you what it is. It combines Google’s prowess in computer vision and natural language processing with Google Search. All you have to do is point the phone’s camera at the object and press the Lens button in the camera. Google Lens will identify the object and show relevant information on the screen. It’s not a separate app but is integrated into the Android camera app.

The new features showcased at I/O 2018:

Smart Text Selection:

Smart Text Selection will let you scan words in real-world documents and use them on your phone. For example, take a book and snap a picture of any page. Now highlight any text in that photo and you’ll get a menu letting you copy the selection to paste elsewhere. The words in the picture don’t even have to be perfectly aligned to be recognized by Lens. This will make scanning and searching much faster and easier.

Style Match:

Style Match is a feature that Amazon has implemented before, with Firefly and AR View, to make the shopping experience better. Lens now does the same. If you like a shirt, scan it and a blue dot appears on top of it. Tapping that blue dot will show you a list of shirts with a similar style, or sometimes the exact same shirt. This can be done with almost anything: shoes, jackets, lamps, backpacks, and so on.

Real-Time Results:

Real-Time Results aims to improve the overall experience of Google Lens. Instead of having to press a shutter button and then wait for Lens to process the image, you will see the information overlaid on the objects in the viewfinder in real time. So if you move the camera around, blue dots will appear on all the items Lens finds. A dot indicates that Lens has identified the item and that more information about it is available. Real-Time Results will not only reduce the time it takes to scan a product but will also make the service more user-friendly.

And Lens will soon work in real time. By proactively surfacing results and anchoring them to the things you see, you'll be able to browse the world around you, just by pointing your camera → https://t.co/A1nUSk8zsK #io18 pic.twitter.com/0xNI4dZez8

— Google (@Google) May 8, 2018

Naturally, the Pixel phones will be able to use all the Lens features through their native camera app. Beyond the Pixel phones, Lens will be available natively on a number of Android devices from brands like LG, Motorola, Xiaomi, Nokia, TCL, OnePlus, BQ, Asus, and Sony.

In fact, the LG G7 ThinQ is one of the first phones to have Google Lens integrated into its camera app. Lens is also expected to feature in flagships from all the above-mentioned brands.

The updates will start rolling out in the next few weeks, and only then will we see how well Lens performs in the real world.

Google Lens is still not a finished product, but seeing how much it has improved in just a year makes me extremely optimistic and equally excited. It’s one example of how seriously Google takes AI and why it considers AI to be its future. Lens shows how AI can be useful to an everyday consumer and gives a glimpse of what the future is going to look like with AI by our side.