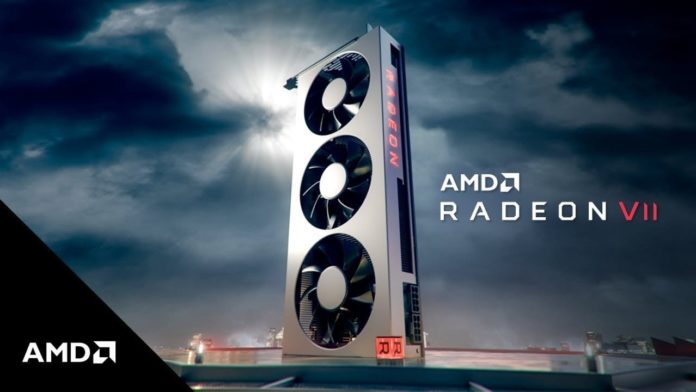

AMD’s Radeon Vega VII is just a few days away from launch, but one reviewer just couldn’t keep the results to himself and published them before the embargo. These benchmarks come from an Argentinian media outlet, hd-tecnologia. The post isn’t exactly a review, but a comparison of how the Radeon Vega VII holds up against its closest competitor, the NVIDIA GeForce RTX 2080.

Test system:

- CPU: Intel Core i7-7700K (4.2GHz)

- Motherboard: ASUS Z270F Gaming

- Memory: 16GB Corsair Vengeance LPX (2x8GB) DDR4-3000

- HDD: 2TB Seagate Barracuda 7200 RPM

- Monitor: Samsung U28D590D 3840×2160

- Drivers: AMD 18.50-RC14-190117, NVIDIA Driver 417.71 WHQL

AMD Radeon Vega VII vs NVIDIA RTX 2080 (Not Confirmed!)

It can’t be said for sure if these benchmarks are legit but I’m willing to bet that they are. As per these tests, the Radeon Vega VII and the GeForce RTX 2080 are more or less on par with one another, with the latter being slightly faster in some titles.

Further reading:

- AMD’s Radeon Vega VII costs $650 to Build; Retail Price Pegged at $699

- AMD Radeon Vega VII Listed For Just $599 on Newegg

- Radeon Navi GPUs Coming in Q2 2019: AMD CEO, Lisa Su

- NVIDIA GeForce GTX 1660 Ti Listed By Russian Retailers

The RTX 2080 beats the Vega VII in Wolfenstein II, The Witcher 3, Civ VI, Rise of the Tomb Raider, Shadow of War, Monster Hunter and Far Cry 5. The deltas are less pronounced at 4K than at 1440p. The world’s first 7nm gaming graphics card, on the other hand, wins mostly in AMD titles like Strange Brigade and Star Control: Origins, as well as in Battlefield V, which, despite being a GeForce title, seems to favor Radeon cards.

Well, that’s pretty much what I expected. I find it hard to recommend the Vega VII to anyone at this point: despite being a tad slower than the RTX 2080, it costs the same and lacks ray tracing and DLSS, both of which are highlights of the Turing architecture.

+ 8 GB instead of useless raytracing or DLSS …. seems a good deal.

+8 GB of mostly unused memory…

Oh, really? You won’t use the extra 516GBps of BANDWIDTH?

There are 180 euro cards with 8GB of RAM on the market. We have been at 8GB for many years. Many games require 8GB today, and some can use more. Many applications require 8GB today, such as Resolve for high-resolution video. It doesn’t look right to buy 700 euro GPUs with 8GB in 2019, or even 1300 euro GPUs with 11GB. 16GB leaves some room for games and applications to grow, and 700 euro for 16GB is the most affordable solution for high-resolution video editing today.

RE 2 used 8GB+.

and more and more games will soon.

Still slower than 2080 in RE2 even after all that memory

Except faster @ 4k – because of all that memory. lol.

Faster by 0.4 fps. AMDumb

What about the price?

One of the above comments says that the Radeon is slower than the GeForce in RE2. I would say it is faster where it matters. At 4K in RE2, the Radeon is faster.

‘it costs the same…’

Really, AMD? Make the card cheaper. Then at least those who do not want RTX/DLSS would have a compelling reason to buy it.

Yes, the +8GB is nice, but not enough to make up for it.

AMD is already losing money on this. If you cared to read anything, you would already know that the memory alone costs over 300. RTX cores literally kill your performance. I would say the VII is better.

lmao, your bio makes it obvious you are an NVIDIA shill. When benchmarks that you believe are true say the cards are basically the same, you should admit that your NVIDIA bias blinds you from recommending the RVII, because:

1. DLSS is proprietary (typical NVIDIA), and Microsoft is already pushing open alternatives which the RVII will support, so that cancels out for most games.

2. Ray tracing is a joke at this stage. No one wants half the performance for better lighting in just how many games? One?

These are two very different companies both making us GPUs – thank god for competition.

I am so deep into the NVIDIA ecosystem that I can’t get out (G-Sync monitor etc.), and I can honestly say that both my 2080 and 2080 Ti need a real contender from Intel or AMD, if only to keep NVIDIA’s rampant greed in check.

I applaud AMD for their new card and will be keen to consider one over a 2080. I feel that the 8GB on the RTX card is very low and designed specifically to keep NVIDIA users in their upgrade cycle (just like with my GTX 780 Ti 3GB). My GTX 780 Ti had roughly the same performance as a GTX 1060 but was hamstrung by its piddly frame buffer.

This AMD card, if its performance is so close to the 2080, will be the perfect 1440p high-refresh card, and the one of choice for many years to come.

The RTX 2080 and this VII (if the benches are to be believed) can be considered to be of the same near mythical excellence to 1440p as the GTX 1070/RX580 are to 1080p.

Can’t tell if your comment is satire.

It’s not, because I agree with him completely. It makes little sense to buy a $700 GPU with only 8GB of VRAM when game consoles packing 16GB are coming next year and there are ALREADY games that can use more than 8GB if you’ve got it to spare. The RTX 2080 (& 2060) are Nvidia’s Fiji (AMD’s Fury/X/Nano), with the exact same mistake of seriously skimping on the VRAM (but unlike AMD, deliberately in Nvidia’s case).

For gaming, there is not much added value other than the VRAM for future use. For content creators and productivity, it’s a big added value.

In the original article they state that AMD used the following platform in their testing, followed by the list of components AMD used for their tests. I don’t think they’re genuine results. I’d go as far as to say they’re fakes: they’ve taken AMD’s own numbers, tweaked them slightly and added a couple of extra games, then compared them with RTX 2080 results and added educated guesses for the few games they added to the graphs. It’s similar to what “Game Debate” did with their clickbait article yesterday. Inside the wall of text that accompanied their graphs there was a sentence that stated “The Radeon VII 16GB has not been released yet, so any comparisons on this page are likely to be unreliable.”

Plus, AMD has a usable feature like FreeSync 2, not that stupid ray tracing that no game supports, like NVIDIA PhysX back in the day with only 6 or 8 trash games supporting it xD. AMD is honest!

Why, when using old CRT monitors (17″ AOC), do the AMD/NVIDIA drivers fail to detect the previously set 1152×864 @ 75 Hz resolution and drop down to 1024×768 @ 60 Hz, leaving me with a flickering log-in screen? My AOC model # P7S91 also fails to show up in either AMD Catalyst Control Centre or the NVIDIA control panel. I have been dealing with this since 2008. I have tried to communicate this to AOC but didn’t get a favourable reply. This is sometimes resolved if I shut down my PC, pull the plug of my monitor, wait for 2 minutes or so and then fire everything up again. Is this the result of a faulty VESA channel in my monitor’s VGA cable? I have experienced similar problems on other old CRT monitors.